Have Robots Taken My Job?

A perspective on the impact of AI methodology for predicting protein structures from an experimental protein scientist

By Dr Steven Harborne

The function of a protein is dependent on its three-dimensional structure. Considering that all proteins are formed of the same 20 amino acid building blocks, the huge number of different functions proteins can adopt is determined by how that string of amino acids folds into its final three-dimensional shape. One of our jobs as protein scientists and crystallographers at Peak Proteins is the experimental determination of protein structures in order to understand function or to assist in the design of therapeutic molecules against diseases. However, this experimental approach can be a long and difficult process, and no guarantees can be made on success. The scientific community already holds vast amounts of information about protein amino acid sequences thanks to sequencing initiatives such as the human genome project. Therefore, if we could predict how a protein was going to fold and what structure would form from a defined sequence of amino acids alone, there would be no need for us to pick up a pipette and do an experiment at all!

The history of predicting protein structures

Predicting protein structure from sequence is far from a new concept, and many scientists have dedicated their careers to the pursuit of this exact goal, generating methods to analyse protein sequences, studying protein structures and creating computer algorithms to try and fold sequences into structures. On the face of it, using a computer to sample lots of conformations that a string of amino acids could adopt and work out which one has the lowest free energy (most likely to be correct) seems simple enough. However, it was proposed in 1969 that a typical protein could adopt more than 10^300 different conformations, far more than the number of atoms estimated to exist in our galaxy. Thus, brute-force calculations of the problem are not an option. Instead, a number of different strategies have emerged, most of which rely on information that can be extracted from existing experimentally determined protein structures, which have been painstakingly collected and catalogued in the publicly funded and accessible Protein Data Bank (PDB) repository. Approaches that have been used include template-based modelling, threading, ab initio modelling, contact prediction or even crowd sourcing!
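To get a feel for why brute force is hopeless, here is a rough back-of-the-envelope sketch in Python. The 10^300 figure is the estimate quoted above; the sampling rate of a quadrillion conformations per second and the approximate age of the universe are assumptions chosen purely for illustration, not measurements of any real machine.

```python
# Back-of-the-envelope estimate; all rates below are illustrative assumptions.
CONFORMATIONS = 10 ** 300              # estimate quoted in the text
SAMPLES_PER_SECOND = 10 ** 15          # hypothetical: a quadrillion conformations per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 1.38e10        # approximate value, used only for comparison


def years_to_enumerate(conformations, samples_per_second):
    """Years needed to visit every conformation once at a fixed sampling rate."""
    seconds = conformations // samples_per_second  # exact integer arithmetic
    return seconds / SECONDS_PER_YEAR


years = years_to_enumerate(CONFORMATIONS, SAMPLES_PER_SECOND)
print(f"Years required: {years:.2e}")
print(f"Multiples of the age of the universe: {years / AGE_OF_UNIVERSE_YEARS:.2e}")
```

Even if the assumed sampling rate were wrong by many orders of magnitude, the conclusion would not change, which is why every practical method leans on prior knowledge instead of exhaustive search.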

In 1994, a total of 35 different groups of scientists came together to test the algorithms they had built and to try and understand how well their computer programmes could perform on completely new protein sequences. Importantly, the experiment took three parts: the collection of targets for prediction from the community where structures were solved but unpublished or soon to be solved experimentally, the collection of predictions from the modelling community, and the assessment and discussion of the results. This was the first ever Community Wide Experiment on the Critical Assessment of Techniques for Protein Structure Prediction (CASP). A competition if you will.

Artificial intelligence: the new kid on the block

Fast forward 26 years from the first ever CASP and the 14th edition has just been held with 215 competitors entering. This time, there was a lot of excitement around one particular group’s programme: AlphaFold2. After only the second year of entering, AlphaFold2 has completely blown away competitors. The group behind this programme is none other than Google’s DeepMind, the same people who brought us AlphaGo and AlphaStar; computer programmes that beat world champion human players at board games or competitive online computer games, respectively. Therefore, with that kind of backing, it’s hardly a surprise that AlphaFold2 did so well, and unlike the two previously mentioned programmes that were largely publicity stunts, AlphaFold2 promises to make a disruptive impact on how we think about using protein models in scientific research. This last point is illustrated perfectly by the fact that a number of the proteins entered into this edition of CASP’s test set were sequences from SARS-CoV-2, a virus that none of us had heard of until the start of 2020.

Fundamentally, the approaches AlphaFold2 takes are not so different from the other modern programmes that were competing at CASP14. So, the secret behind the success? It is the implementation of artificial intelligence (AI), otherwise known as machine learning or neural networks, into the process. In other programmes, decisions on what to do with pieces of information, although automated, have been devised by a human and based on their understanding of the problem and how to solve it – although, since the appearance and success of the original AlphaFold in CASP13 other groups have begun to develop their own neural network based approaches.

So how was AlphaFold2’s AI developed? DeepMind refer to their method as a deep neural network, trained by supervised learning and reinforcement learning. Essentially, the naïve AI is shown a huge number of examples of what a protein structure with a given amino acid sequence should look like (~170,000 examples from the PDB) in a language it can understand, a ‘spatial graph’, and allowed to build up a complex set of rules through repetitive iterations of learning and testing. Once trained, the AI can use its set of rules to predict how an amino acid sequence it’s never seen before will fold into a protein structure. The developers say it takes the mere equivalent of 100-200 high end desktop computers run over a few weeks to crunch these calculations, a far greater resource than could be afforded by the best funded academic research groups, but fairly modest when compared to the kinds of compute power required to run Google’s search engine or allow Netflix to serve up everyone’s entertainment to their homes.

Shall I hang up my lab coat now?

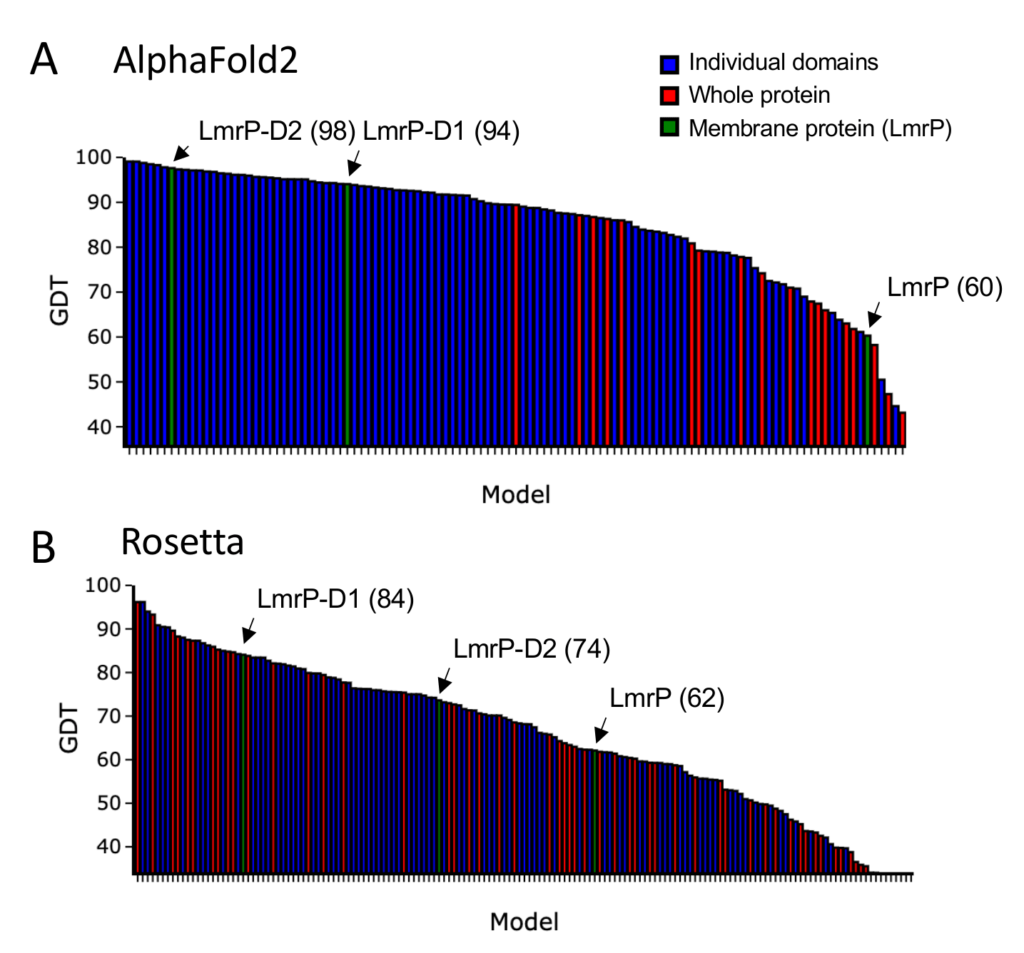

There is no denying that AlphaFold2’s performance is impressive, but is the problem ‘mostly solved’ as claimed by DeepMind’s PR department? AlphaFold2 predicts protein structures that score extremely highly when measured using the method devised by CASP, certainly enough so that the organisers have declared that the 50-year-old challenge has been completed. The method CASP uses to evaluate predicted models is a Global Distance Test (GDT) score, which is calculated as the percentage of carbon atoms in the backbone of the model being within a defined distance of the matching atoms in the experimentally determined structure. Models would be given a perfect score of 100 if all backbone carbon atoms fall less than 1 Å away from the experimentally determined structure. As a rule of thumb, models with scores above 60 are considered correctly folded, with losses in scoring largely due to incorrectly modelled loops or small portions of the protein. Impressively, AlphaFold2 scored 99 for its best target, and an average of 85 over all targets it modelled. The next best competitor (Rosetta from the Baker lab), scored a maximum of 96 and an average of 65, still a very commendable effort.
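For the curious, the idea behind a GDT-style score can be sketched in a few lines of Python. This is a simplified illustration, not CASP's actual scoring software. It assumes the model and the experimental structure have already been superimposed and are given as matched lists of alpha-carbon coordinates in Å. It averages over the 1, 2, 4 and 8 Å cut-offs conventionally used for the GDT_TS variant, which goes beyond the simplified description above. The function names and the toy coordinates are made up for the example.

```python
import math


def percent_within(model, reference, cutoff):
    """Percentage of matched atoms lying within `cutoff` Å of their reference position."""
    if len(model) != len(reference) or not model:
        raise ValueError("need two non-empty coordinate lists of equal length")
    close = sum(1 for m, r in zip(model, reference) if math.dist(m, r) <= cutoff)
    return 100.0 * close / len(model)


def gdt_ts(model, reference, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Average of the per-cutoff percentages (assumes pre-superimposed structures)."""
    return sum(percent_within(model, reference, c) for c in cutoffs) / len(cutoffs)


# Toy data: four alpha-carbon positions, displaced by 0.5, 1.5, 3.0 and 9.0 Å.
reference = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0), (11.4, 0.0, 0.0)]
model = [(0.5, 0.0, 0.0), (3.8, 1.5, 0.0), (7.6, 0.0, 3.0), (11.4, 9.0, 0.0)]

score = gdt_ts(model, reference)
print(f"GDT_TS = {score:.2f}")
```

Note that a model with every atom within 1 Å of its partner passes all four cut-offs and so scores 100, in line with the description above. A real implementation also has to search for the superposition that maximises the score, which this sketch deliberately leaves out.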

But wait, not so fast! DeepMind’s PR department and the organisers of CASP may be satisfied that this problem is solved, but as one of the people potentially put out of a job by our robot friends, my criteria are somewhat more exacting… A high GDT score may mean all backbone atoms have been correctly placed, but this doesn’t necessarily mean side chain atoms are well placed. Furthermore, the models that are generated by any approach will only be useful if they can assist us in the real world to extract information about function that was previously unknown, for example how a protein behaves in a disease state, or if the models can be used as the basis for onward research, for example in the drug discovery process.

Performance of AlphaFold2 versus Rosetta in CASP14 – Bar chart of GDT scores for A) AlphaFold2 or B) Rosetta, ordered by score. Individual domains are coloured blue, whole proteins are coloured red and the membrane protein LmrP is highlighted in green.

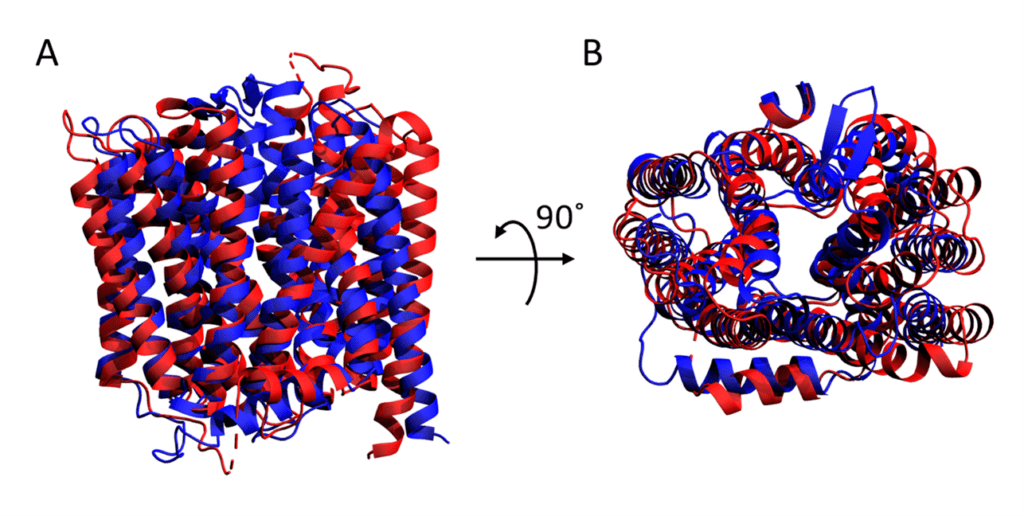

With this in mind, let’s take a closer look at some of the results of the competition. It is important to note that the target proteins in the test set were considered not only as a whole, but also as individual domains. When the AlphaFold2 results are ranked by GDT score, it is clear that the individual domains score better than when the whole protein is considered (Figure 1A). Thus, AlphaFold2 is excellent at local structural features, but less good at a global level over several protein domains. This is particularly evident for the membrane protein LmrP in the test set, which represents one of the most challenging classes of protein. LmrP is a membrane spanning transporter protein formed of a pseudo-dimeric repeat of domains 1 and 2 (D1 and D2). The individual domains were modelled extremely well alone by AlphaFold2, scoring 94 and 98, respectively. However, as a whole, AlphaFold2 produced a model for LmrP that only scored 60, indicating that it did not match the experimental model very well (Figure 2); this was one of the worst scores AlphaFold2 achieved. In comparison, models produced by Rosetta perform less well overall, but there is less of a clear trend toward better modelling of individual domains (Figure 1B). In fact, one of the most accurate predictions Rosetta made was of a whole protein, and it scored similarly to AlphaFold2 for modelling the entire LmrP (62).

Modelling of LmrP by AlphaFold2 – Cartoon representation of the experimental crystal structure of LmrP (red) superimposed with the model predicted by AlphaFold2 (blue) as viewed from the A) front and B) top.

You may not think this is so troubling, as each domain can be well modelled; however, I would argue that this is a very good reason why we’re not done with experimental work just yet. Particularly in the case of LmrP, the substrate binding site involved in the function of the protein lies between the two domains, and for this class of protein this is also the site at which small molecule pharmacophores would be targeted. You may also argue that membrane proteins are flexible and exist in numerous different folded states, and so the experimental structure and the modelled structure are both correct, they just represent different states. However, this then raises the question of how AlphaFold2 can deal with flexibility in proteins and multiple protein states in general. In fact, how would AlphaFold2 deal with intrinsically disordered proteins, or proteins that only become folded once bound to a partner protein? How about protein complexes? If individual domains of the same protein cannot be well accounted for, we probably won’t have much faith that an entire ribosome or respiratory complex can be correctly modelled. I suspect instead that a main reason membrane proteins will continue to be a source of difficulty for modelling programmes is that their membrane environment, which plays an extremely important role in protein folding, is not considered during the modelling process.

Finally, let’s go back to the GDT score and amino acid side-chain positions. In a typical drug discovery campaign that uses structure-based drug design, the exact position where the molecule binds and how the molecule interacts with the protein are critical pieces of information. The formation or breakage of a couple of hydrogen bonds can be the difference between a nanomolar affinity lead compound and just another dead end that falls by the wayside. For these types of interactions, even errors in the side chains of less than 1 Å could completely alter the analysis, rendering the model useless rather than predictive. The ideal starting point would instead be a high resolution experimentally determined structure with the lead compound bound, which could be used as a template for improvement. At the moment, there is still no way the current modelling programmes can provide this level of accuracy, particularly with regard to small molecule binding (which is a completely separate problem with its own difficulties aside from modelling the protein correctly). Of course, this also ignores the fact that many drug discovery campaigns have been, and will be, successful in the absence of any protein structures at all!

Can robots and humans be friends?

So, the robots haven’t taken my job (yet), but that’s not to say AlphaFold2 won’t change the way we work. Prior knowledge of a protein structure, even if not perfectly modelled, can be extremely useful for experimental determination of protein structures. For example, in X-ray crystallography, phase data is required in order to turn a set of diffraction data into an electron density map for model building. Phases are not captured in a standard experiment, and to determine them experimentally requires a number of extra steps. Most of the time in modern crystallography, these phases can be ‘borrowed’ from a related structure that has already been solved and deposited in the PDB. With the improvements AlphaFold2 brings to structure prediction, in the cases where no suitable related protein structure has been solved and made available in the PDB, well modelled individual domains would provide an excellent source of borrowed phases, allowing quick and easy phasing of novel experimental data. This could turn experimental phasing from something that is not often done to something that is never done, markedly speeding up a step that can sometimes be a serious bottleneck. Another example of where predicted models could help is when analysing low resolution or noisy data from cryoEM experiments. Even before AlphaFold was on the scene, protein models from prediction software were docked into cryoEM density and used as the starting point from which the model could be improved toward the experimental data, rather than building what are often large protein complexes from scratch by hand.

Of course, these uses rely on the AlphaFold2 developers either releasing all details of the methodology for others to improve their own approaches, releasing the code for others to use (hardware requirement dependent), or at the least providing an API or server that could be used by the community to predict their own favourite protein. Whether this will happen remains to be seen, as the inner workings of Google’s DeepMind will be considered a highly valuable trade secret, and any code strict intellectual property. We can only hope that they are willing to contribute back to the community that has supported their latest endeavour, from the diligent collection and cataloguing of protein sequences and structures to the willingness to share the open access code that many of the protein prediction programmes rely on.

Until robots have sorted all of your protein supply or structure determination needs, Peak Proteins is still here to provide you with an expert resource. Please get in touch at info@peakproteins.com.